Can policymakers regulate AI technology without stifling it completely?

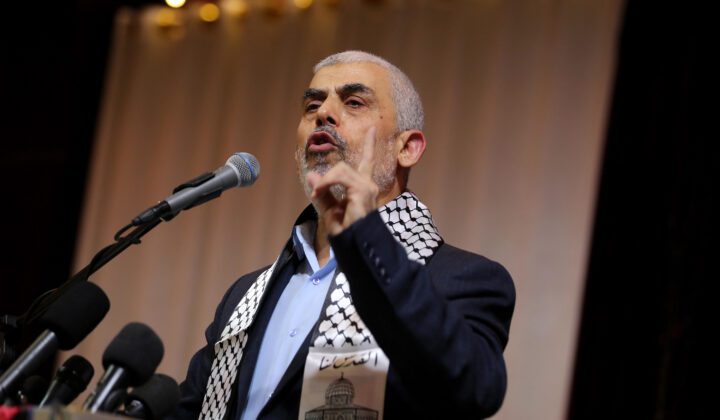

Last week, the Federal Communications Commission banned robocalls that use voices generated by artificial intelligence (AI). The action followed a series of calls placed in New Hampshire that attempted to dissuade people from voting in the state’s primary—using the replicated voice of President Joe Biden.

The incident in New Hampshire is one of the most egregious uses of AI-generated deepfakes to indirectly influence an election. Unfortunately, such misuse isn't new. Deepfake technology has made headlines since at least 2019, when the tools were used to target then-Speaker of the House Nancy Pelosi, Rep. Alexandria Ocasio-Cortez of New York, and former President Donald Trump. AI has become so pervasive that it has even found its way into comedy sketches.

But it's not all in good fun. Experts worry that AI could distort elections by creating a situation in which false information is believed to be true and true information is believed to be false. The possibilities cry out for government regulation, but the challenge is to regulate in a way that doesn't stunt a sector with tremendous potential for human advancement. With the presidential election looming, the clock is ticking. —Melissa Amour, Managing Editor

AI’s Threat to Democracy

Just before a recent election in Slovakia, a fake audio recording in which a candidate boasted about rigging the election went viral. The incident raised alarms in the US, where it’s not difficult to envision a similar scenario playing out in an election year.

AI is already being used to craft highly targeted, believable phishing campaigns, and it can also be deployed in cyberattacks that compromise election security. None of these threats are new; we saw similar activity in 2016 and 2020. But advances in AI since those elections, along with the technology's widespread availability, could significantly aid bad actors in 2024.

The threats AI poses to democracy are ubiquitous, and the government must implement safeguards against uses of AI intended to deceive the public. The question is whether it can restrain the technology effectively without stifling it completely. It's a tall order. Let's take a look at where things stand.

What You Should Know

So far, proposed AI legislation has largely focused on generative AI and on protecting personal privacy from manipulated content. To that end, a bipartisan group in Congress introduced the No Artificial Intelligence Fake Replicas And Unauthorized Duplications (No AI FRAUD) Act last month.

“[T]he No AI FRAUD Act, will punish bad actors using generative AI to hurt others—celebrity or not. Everyone should be entitled to their own image and voice and my bill seeks to protect that right.” —Rep. María Elvira Salazar of Florida

But hopes are low that Congress will pass any AI-related legislation before the election, so states and cities are taking regulatory action on their own. As of September, state legislatures had introduced 191 AI-related bills in 2023, a 440% increase over 2022.

“States being the laboratories of democracy, they can act a little bit more quickly…and some are, both through their boards or organizations of elections or through state legislation. But this is a space that we certainly have to come to some formal agreement on because again, it is about protecting democracy.” —US Rep. Marcus Molinaro of New York

The White House's executive order on AI is the most comprehensive federal action on the technology so far, and the administration has also released a blueprint for an AI Bill of Rights.

“The Order directed sweeping action to strengthen AI safety and security, protect Americans’ privacy, advance equity and civil rights, stand up for consumers and workers, promote innovation and competition, advance American leadership around the world, and more.” —White House AI Fact Sheet

But it's a fine line. In their zeal to protect democracy, jobs, and humanity itself, lawmakers must avoid crafting regulation that strangles innovation. In short, we don't want to lose our competitive edge through the kind of overregulation seen in Europe, letting competitors like China take the lead.

How We Got Here

Our hopes and fears for the future of AI are fundamentally shaped by our recent experience with the rise of social media. The analogy is obvious: social media was similarly new, destabilizing, enduring, and to some extent inevitable. Many lawmakers on both sides of the aisle believe insufficient regulation of social media led to unintended consequences detrimental to democracy and to individuals' general well-being.

“Because different sides see different facts [on social media], they share no empirical basis for reaching a compromise. Because each side hears time and again that the other lot are good for nothing but lying, bad faith, and slander, the system has even less room for empathy. Because people are sucked into a maelstrom of pettiness, scandal, and outrage, they lose sight of what matters for the society they share.” —The Economist

On the other hand, the US is the undisputed leader in social media. The largest social media companies are headquartered in the US, creating jobs and generating economic growth. As a result, the US, as the sector's chief regulator, has marginally more control over it globally.

“[T]he internet economy’s contribution to the US GDP grew 22 percent per year since 2016, in a national economy that grows between two to three percent per year.” —Interactive Advertising Bureau/Harvard Business School study

Tech innovation is at the forefront of modern American economic success.

“The seven largest US technology companies, often referred to as the ‘Magnificent Seven’ and comprising Alphabet Inc (Google), Amazon.com Inc, Apple Inc, Meta Platforms Inc, Microsoft Corp, Nvidia Corp, and Tesla Inc, boast a combined market value exceeding a staggering $12 trillion (€11 trillion). To put this in perspective, their value is almost equivalent to the combined gross domestic products of the four largest European economies: Germany, the United Kingdom, France, and Italy, which amount to $13 trillion.” —EuroNews

Government leaders are determined to achieve safer outcomes with AI through greater engagement with tech companies, rather than relying on those companies to police themselves, an approach that has produced, at best, a spotty record. But as our experience with social media shows, regulation can, and should, only go so far, lest it diminish economic opportunity and US influence internationally.

What People Are Saying

One thing is certain: AI will change democracy, just as earlier technologies such as social media, the internet, and television did before it. The challenge is to regulate it so that those changes are largely beneficial, without stifling the development of a groundbreaking emerging technology. Some people worry that proposed regulations could go too far.

“[P]re-emptive regulation can erect barriers to entry for companies interested in breaking into an industry. Established players, with millions of dollars to spend on lawyers and experts, can find ways of abiding by a complex set of new regulations, but smaller start-ups typically don’t have the same resources. This fosters monopolization and discourages innovation.” —Tim Wu, law professor at Columbia University and author of “The Curse of Bigness: Antitrust in the New Gilded Age.”

Meanwhile, others' fears about AI's disruptive or even apocalyptic potential are driving calls for stringent measures.

“Bad actors using AI…represents a dire threat to the very core of democratic societies. As AI technology continues to advance, the potential for abuse grows, making it imperative to act decisively. Strengthening legal frameworks, promoting ethical AI, increasing public awareness, and fostering international cooperation are critical steps in combating this threat.” —Neil Sahota, IBM Master Inventor, United Nations AI advisor, and author of “Own the AI Revolution”

In the end, a balanced approach that minimizes harm while encouraging innovation seems ideal. After all, AI could lead to enormous advances in human knowledge and ingenuity.

“The path ahead demands robust legal frameworks, respect for intellectual property, and stringent safety standards, akin to the meticulous oversight of nuclear energy. But beyond regulations, it requires a shared vision. A vision where technology serves humanity and innovation is balanced with ethical responsibility. We must embrace this opportunity with wisdom, courage, and a collective commitment to a future that uplifts all of humanity.” —Former US Rep. Will Hurd of Texas, who previously served as a board member of OpenAI

- AI, democracy, and disinformation: How to stay informed without being influenced by fake news —The DePaulia

- Inside OpenAI’s plan to make AI more ‘democratic’ —Time

- ‘One-size-fits-all’ approach not fit for deepfakes —Economic Times

- Opinion: Effective AI regulation requires understanding general-purpose AI —Brookings

- Opinion: Bridging the trust gap: Using AI to restore faith in democracy —Federal Times

- ‘Un-American’: Donald Trump threatens NATO, says he might ‘encourage’ Russian attack —USA Today

- Flipped seat in New York: Democrat Tom Suozzi wins special election to replace ousted US Rep. George Santos —NBC News

- Second time’s the charm: House Republicans impeach Homeland Security Secretary Alejandro Mayorkas in historic, controversial vote —ABC News

- Food for thought: Report shows 23.5M independent voters are disenfranchised in 2024 primaries —Unite America

- Give this a listen: Ukraine aid on the line, Tucker goes to Moscow —The Dispatch

- Holland: The challenge to Holland’s liberalism —The UnPopulist

- Hungary: Prime Minister Viktor Orbán faces political crisis after president’s resignation —PBS

- Lebanon: Israel launches strikes in Lebanon after rockets hit army base —BBC

- Russia: Warning from House Intel about Russia’s space power —Politico

- Ukraine: NATO’s Stoltenberg urges US House to pass Ukraine aid, says China watching —Reuters

Hey Topline readers, you remember the drill. We want to hear your reactions to today’s stories. We’ll include some of your replies in this space in our next issue of The Topline. Click here to share your take, and don’t forget to include your name and state. We’re looking forward to hearing from you!